An important aspect of business processes is human task management. While some of

the work performed in a process can be executed automatically, some tasks need to be

executed by human actors. jBPM supports a special human task node inside processes for

modeling this interaction with human users. This human task node allows process designers

to define the properties related to the task that the human actor needs to execute, like

for example the type of task, the actor(s), the data associated with the task, etc.

jBPM also includes a so-called human task service, a back-end service that manages the

life cycle of these tasks at runtime. This implementation is based on the WS-HumanTask

specification. Note however that this implementation is fully pluggable, meaning that

users can integrate their own human task solution if necessary.

To have human actors participate in your processes, you first need to (1) include

human task nodes inside your process to model the interaction with human actors,

(2) integrate a task management component (like for example the WS-HumanTask based

implementation provided by jBPM) and (3) have end users interact with a human task

client to request their task list and claim and complete the tasks assigned to them.

Each of these three elements will be discussed in more detail in the next sections.

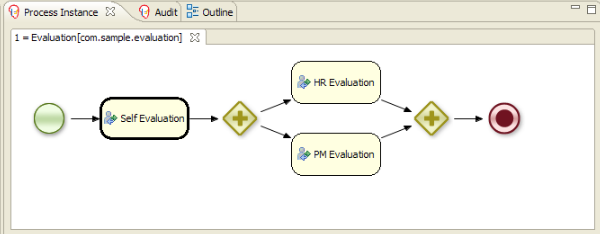

12.1. Human tasks inside processes

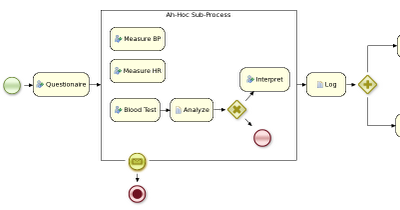

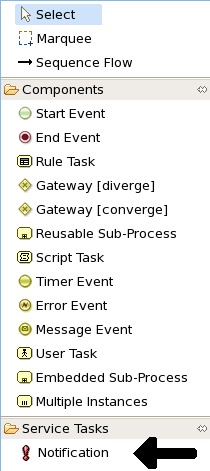

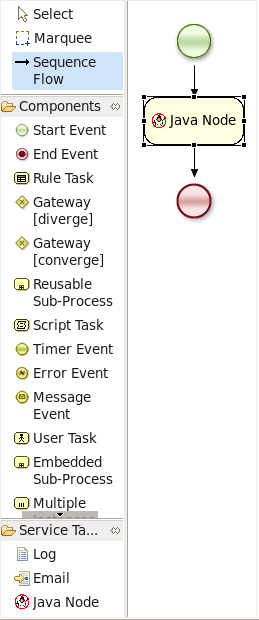

jBPM supports the use of human tasks inside processes using a special user task

node (as shown in the figure above). A user task node represents an atomic task that

needs to be executed by a human actor. [Although jBPM has a special user task node for

including human tasks inside a process, human tasks are considered the same as any other

kind of external service that needs to be invoked and are therefore simply implemented

as a domain-specific service. Check out the chapter on domain-specific services to

learn more about how to register your own domain-specific services.]

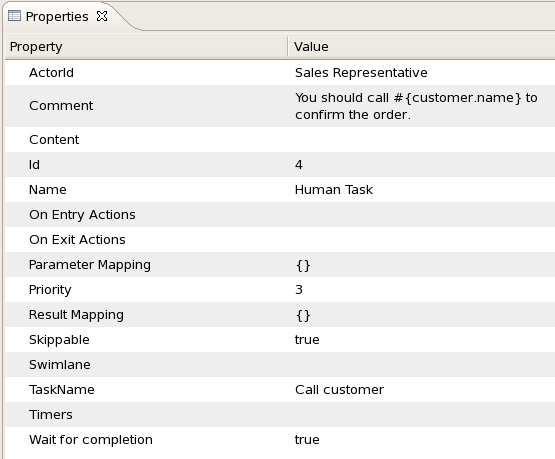

A user task node contains the following properties:

- Id: The id of the node (which is unique

within one node container).

- Name: The display name of the node.

- TaskName: The name of the human task.

- Priority: An integer indicating the priority

of the human task.

- Comment: A comment associated with the human

task.

- ActorId: The actor id that is responsible for

executing the human task. A list of actor id's can be specified using

a comma (',') as separator.

- GroupId: The group id that is responsible for

executing the human task. A list of group id's can be specified using

a comma (',') as separator.

- Skippable: Specifies whether the human task

can be skipped, i.e., whether the actor may decide not to execute the

task.

- Content: The data associated with this task.

- Swimlane: The swimlane this human task node

is part of. Swimlanes make it easy to assign multiple human tasks to

the same actor. See the human tasks chapter for more detail on how to

use swimlanes.

- On entry and on exit actions: Action scripts

that are executed upon entry and exit of this node, respectively.

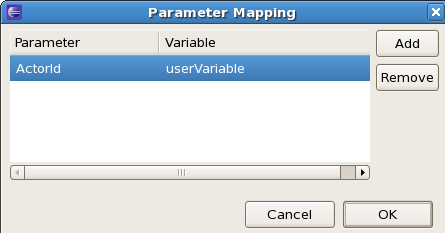

- Parameter mapping: Allows copying the value

of process variables to parameters of the human task. Upon creation of

the human tasks, the values will be copied.

- Result mapping: Allows copying the value

of result parameters of the human task to a process variable. Upon

completion of the human task, the values will be copied. A human task

has a result variable "Result" that contains

the data returned by the human actor. The variable "ActorId" contains

the id of the actor that actually executed the task.

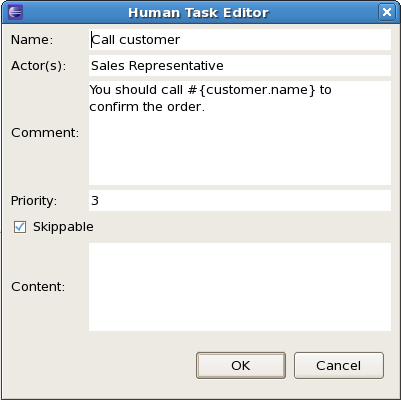

You can edit these variables in the properties view (see below) when selecting

the user task node, or the most important properties can also be edited by

double-clicking the user task node, after which a custom user task node editor is

opened, as shown below as well.

In many cases, the parameters of a user task (like for example the task name,

actorId, priority, etc.) can be defined when creating the process. You simply fill in

value of these properties in the property editor. It is however likely that some

of the properties of the human task are dependent on some data related to the process

instance this task is being requested in. For example, if a business process is used

to model how to handle incoming sales requests, tasks that are assigned to a sales

representative could include information related to that specific sales request, like

its unique id, the name of the customer that requested it, etc. You can make your

human task properties dynamic in two ways:

- #{expression}: Task parameters of type String can use #{expression} to embed the value of

the given expression in the String. For example, the comment related to a task

might be "Please review this request from user #{user}", where user is a variable

in the process. At runtime, #{user} will be replaced by the actual user name for

that specific process instance. The value of #{expression} will be resolved when

creating human task and the #{...} will be replaced by the toString() value of the

value it resolves to. The expression could simply be the name of a variable (in

which case it will be resolved to the value of the variable), but more advanced

MVEL expressions are possible as well, like for example #{person.name.firstname}.

Note that this approach can only be used for String parameters. Other parameters

should use parameter mapping to map a value to that parameter.

- Parameter mapping: You can map the value of a process variable (or a

value derived from a variable) to a task parameter. For example, if you need to

assign a task to a user whose id is a variable in your process, you can do so

by mapping that variable to the parameter ActorId, as shown in the following

screenshot. [Note that, for parameters of type String, this would be identical

to specifying the ActorId using #{userVariable}, so it would probably be easier

to use #{expression} in this case, but parameter mapping also allow you to assign

a value to properties that are not of type String.]

12.1.1. User and group assignment

Tasks can be assigned to one specific user. In that case, the task will show

up on the task list of that specific user only. If a task is assigned to more than

one user, any of those users can claim and execute this task.

Tasks can also be assigned to one or more groups. This means that any user

that is part of the group can claim and execute the task. For more information on

how user and group management is handled in the default human task service, check

out the user and group assignment.

Human tasks typically present some data related to the task that needs to be

performed to the actor that is executing the task and usually also request the actor

to provide some result data related to the execution of the task. Task forms are

typically used to present this data to the actor and request results.

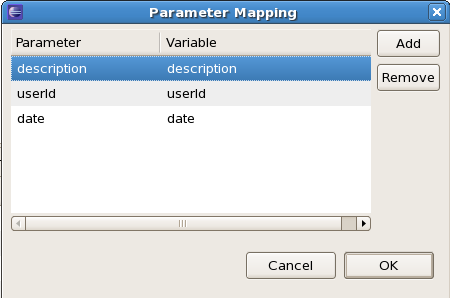

12.1.2.1. Task parameters

Data that needs to be displayed in a task form should be passed to the task,

using parameter mapping. Parameter mapping allows you to copy the value of a

process variable to a task parameter (as described above). This could for example

be the customer name that needs to be displayed in the task form, the actual request,

etc. To copy data to the task, simply map the variable to a task parameter. This

parameter will then be accessible in the task form (as shown later, when describing

how to create task forms).

For example, the following human task (as part of the humantask example in

jbpm-examples) is assigned to a sales representative that needs to decide whether

to accept or reject a request from a customer. Therefore, it copies the following

process variables to the task as task parameters: the userId (of the customer doing

the request), the description (of the request), and the date (of the request).

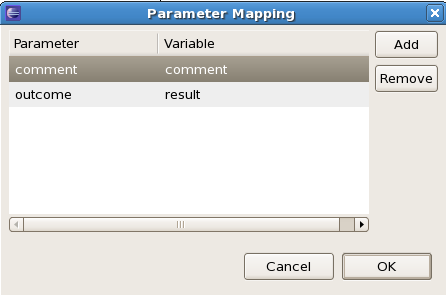

Data that needs to be returned to the process should be mapped from the

task back into process variables, using result mapping. Result mapping allows you

to copy the value of a task result to a process variable (as described above).

This could for example be some data that the actor filled in. To copy a task

result to a process variable, simply map the task result parameter to the variable

in the result mapping. The value of the task result will then be copied after

completion of the task so it can be used in the remainder of the process.

For example, the following human task (as part of the humantask example in

jbpm-examples) is assigned to a sales representative that needs to decide whether

to accept or reject a request from a customer. Therefore, it copies the following

task results back to the process: the outcome (the decision that the sales

representative has made regarding this request, in this case "Accept" or "Reject")

and the comment (the justification why).

User tasks can be used in combination with swimlanes to assign multiple human tasks

to the same actor. Whenever the first task in a swimlane is created, and that task

has an actorId specified, that actorId will be assigned to (all other tasks of) that

swimlane as well. Note that this would override the actorId of subsequent tasks in

that swimlane (if specified), so only the actorId of the first human task in a swimlane

will be taken into account, all others will then take the actorId as assigned in the

first one.

Whenever a human task that is part of a swimlane is completed, the actorId of that swimlane is

set to the actorId that executed that human task. This allows for example to assign a human task to

a group of users, and to assign future tasks of that swimlame to the user that claimed the first task.

This will also automatically change the assignment of tasks if at some point one of the tasks is

reassigned to another user.

To add a human task to a swimlane, simply specify the name of the swimlane as the value of the

"Swimlane" parameter of the user task node. A process must also define all the swimlanes that it contains.

To do so, open the process properties by clicking on the background of the process and click on the

"Swimlanes" property. You can add new swimlanes there.

The new BPMN2 Eclipse editor will support a visual representation of swimlanes

(as horizontal lanes), so that it will be possible to define a human task as part of

a swimlane simply by dropping the task in that lane on the process model.

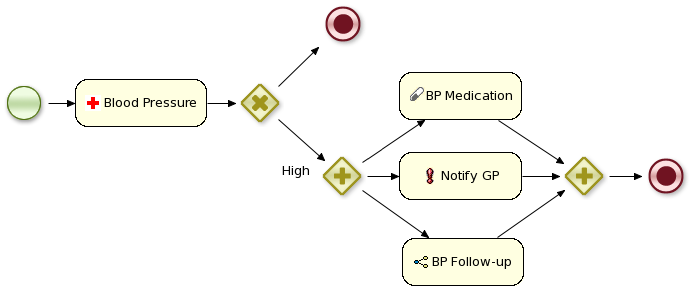

The jbpm-examples module has some examples that show human tasks in action, like

the evaluation example and the humantask example. These examples show some of the

more advanced features in action, like for example group assignment, data passing in

and out of human tasks, swimlanes, etc. Be sure to take a look at them for more

details and a working example.

As far as the jBPM engine is concerned, human tasks are similar to any other external

service that needs to be invoked and are implemented as a domain-specific service. Check

out the chapter on domain-specific services for more detail on how to include a domain-

specific service in your process. Because a human task is an example of such a domain-

specific service, the process itself contains a high-level, abstract description of the

human task that need to be executed, and a work item handler is responsible for binding

this abstract tasks to a specific implementation. Using our pluggable work item handler

approach, users can plug in the human task service that is provided by jBPM, as descrived

below, or they may register their own implementation.

The jBPM project provide a default implementation of a human task service based on the

WS-HumanTask specification. If you do not have the requirement to integrate an existing human

task service, you can use this service. It manages the life cycle of the tasks (creation,

claiming, completion, etc.) and stores the state of all the tasks, task lists, etc. It also

supports features like internationalization, calendar integration, different types of

assignments, delegation, deadlines, etc. It is implemented as part of the jbpm-human-task

module.

The task service implementation is based on the WS-HumanTask (WS-HT) specification.

This specification defines (in detail) the model of the tasks, the life cycle, and a lot

of other features as the ones mentioned above. It is pretty comprehensive and can be found

here.

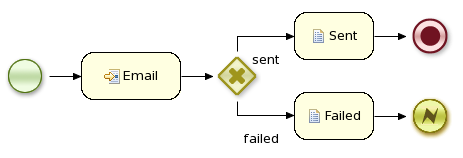

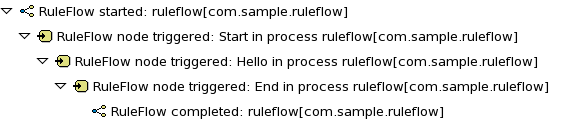

Looking from the perspective of the process, whenever a user task node

is triggered during the execution of a process instance, a human task is created.

The process will only leave that node when that human task has been completed

or aborted.

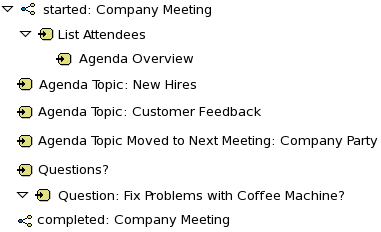

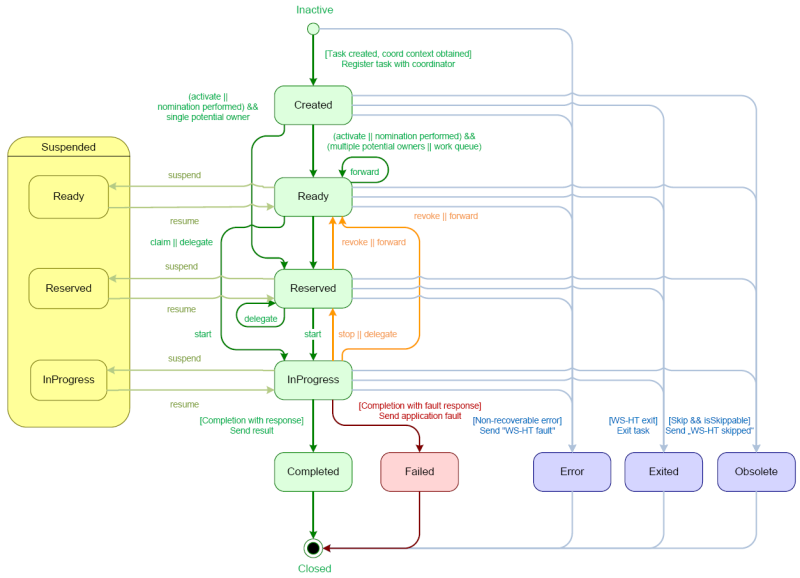

The human task itself usually has a complete life cycle itself as well. We

will now shortly introduce this life cycle, as shown in the figure below. For more

details, check out the WS-HumanTask specification.

Whenever a task is created, it starts in the "Created" stage. It

usually automatically transfers to the

"Ready" state, at which point the task will show up on the task list of

all the actors that are allowed to execute

the task. There, it is waiting for one of these actors to claim the

task, indicating that he or she will be

executing the task. Once a user has claimed a task, the status is changed

to "Reserved". Note that a task that

only has one potential actor will automatically be assigned to that actor

upon creation of that task. After claiming

the task, that user can then at some point decide to start executing the

task, in which case the task status is

changed to "InProgress". Finally, once the task has been performed, the

user must complete the task (and can specify

the result data related to the task), in which case the status is changed

to "Completed". If the task could not be

completed, the user can also indicate this using a fault response (possibly

with fault data associated), in which

case the status is changed to "Failed".

The life cycle explained above is the normal life cycle. The service

also allows a lot of other life cycle methods, like:

- Delegating or forwarding a task, in which case it is assigned

to another actor

- Revoking a task, so it is no longer claimed by one specific

actor but reappears on the task list of

all potential actors

- Temporarly suspending and resuming a task

- Stopping a task in progress

- Skipping a task (if the task has been marked as skippable),

in which case the task will not be executed

12.2.2. Linking the human task service to the jBPM engine

The human task service needs to be integrated with the jBPM engine just like

any other external service, by registering a work item handler that is responsible

for translating the abstract work item (in this case a human task) to a specific

invocation of a service. We have implemented this work item handler

(org.jbpm.process.workitem.wsht.WSHumanTaskHandler in the jbpm-human-task module),

so you can register this work item handler like this:

StatefulKnowledgeSession ksession = ...;

ksession.getWorkItemManager().registerWorkItemHandler("Human Task",

new WSHumanTaskHandler());

If you are using persistence, you should use the CommandBasedWSHumanTaskHandler

instead (org.jbpm.process.workitem.wsht.CommandBasedWSHumanTaskHandler in the

jbpm-human-task module), like this:

StatefulKnowledgeSession ksession = ...;

ksession.getWorkItemManager().registerWorkItemHandler("Human Task",

new CommandBasedWSHumanTaskHandler());

By default, this handler will connect to the human task service on the local

machine on port 9123. You can easily change the address and port of the human task

service that should be used by by invoking the setConnection(ipAddress, port)

method on the WSHumanTaskHandler.

The communication between the human task service and the process engine,

or any task client, is done using messages being sent between the client and the

server. The implementation allows different transport mechanisms being plugged

in, but by default, Mina

(http://mina.apache.org/) is used

for client/server communication. An alternative implementation using HornetQ

is also available.

12.2.3. Interacting with the human task service

The human task service exposes various methods to manage the life cycle of

the tasks through a Java API. This allows clients to integrate (at a low level)

with the human task service. Note that end users should probably will not interact

with this low-level API directly but rather use one of the more user-friendly task

clients (see below) that offer a graphical user interface to request task lists,

claim and complete tasks, etc. These task clients internally interact with the

human task service using this API as well. But the low-level API is also available

for developers to interact with the human task service directly.

A task client (class org.jbpm.task.service.TaskClient) offers the following

methods for managing the life cycle of human tasks:

public void start( long taskId, String userId,

TaskOperationResponseHandler responseHandler )

public void stop( long taskId, String userId,

TaskOperationResponseHandler responseHandler )

public void release( long taskId, String userId,

TaskOperationResponseHandler responseHandler )

public void suspend( long taskId, String userId,

TaskOperationResponseHandler responseHandler )

public void resume( long taskId, String userId,

TaskOperationResponseHandler responseHandler )

public void skip( long taskId, String userId,

TaskOperationResponseHandler responseHandler )

public void delegate( long taskId, String userId, String targetUserId,

TaskOperationResponseHandler responseHandler )

public void complete( long taskId, String userId, ContentData outputData,

TaskOperationResponseHandler responseHandler )

...

If you take a look a the method signatures you will notice that almost all of

these methods take the following arguments:

- taskId:

The id of the task that we are working with. This is usually extracted from the

currently selected task in the user task list in the user interface.

- userId: The id of the user

that is executing the action. This is usually the id of the user that is

logged in into the application.

- responseHandler: Communication with

the task service is asynchronous, so you should use a response handler

that will be notified when the results are available.

When you invoke a message on the TaskClient, a message is created that

will be sent to the server, and the server will execute the logic that

implements the correct action.

The following code sample shows how to create a task client and interact with

the task service to create, start and complete a task.

TaskClient client = new TaskClient(new MinaTaskClientConnector("client 1",

new MinaTaskClientHandler(SystemEventListenerFactory.getSystemEventListener())));

client.connect("127.0.0.1", 9123);

// adding a task

BlockingAddTaskResponseHandler addTaskResponseHandler =

new BlockingAddTaskResponseHandler();

Task task = ...;

client.addTask( task, null, addTaskResponseHandler );

long taskId = addTaskResponseHandler.getTaskId();

// getting tasks for user "bobba"

BlockingTaskSummaryResponseHandler taskSummaryResponseHandler =

new BlockingTaskSummaryResponseHandler();

client.getTasksAssignedAsPotentialOwner("bobba", "en-UK",

taskSummaryResponseHandler);

List<TaskSummary> tasks = taskSummaryResponseHandler.getResults();

// starting a task

BlockingTaskOperationResponseHandler responseHandler =

new BlockingTaskOperationResponseHandler();

client.start( taskId, "bobba", responseHandler );

responseHandler.waitTillDone(1000);

// completing a task

responseHandler = new BlockingTaskOperationResponseHandler();

client.complete( taskId, "bobba".getId(), null, responseHandler );

responseHandler.waitTillDone(1000);

12.2.4. User and group assignment

Tasks can be assigned to one specific user. In that case, the task will show

up on the task list of that specific user only. If a task is assigned to more than

one user, any of those users can claim and execute this task. Tasks can also be

assigned to one or more groups. This means that any user that is part of the group

can claim and execute the task.

The human task service needs to know what all the possible valid user and group

ids are (to make sure tasks are assigned to existing users and/or groups to avoid

errors and tasks that end up assigned to non-existing users). You need to make sure

to register all users and groups before tasks can be assigned to them. This can be

done dynamically.

EntityManagerFactory emf = Persistence.createEntityManagerFactory("org.jbpm.task");

TaskService taskService = new TaskService(emf,

SystemEventListenerFactory.getSystemEventListener());

TaskServiceSession taskSession = taskService.createSession();

// now register new users and groups

taskSession.addUser(new User("krisv"));

taskSession.addGroup(new Group("developers"));

The human task service itself does not maintain the relationship between users

and groups. This is considered outside the scope of the human task service, as in

general businesses already have existing services that contain this information (like

for example an LDAP service). Therefore, the human task service also offers you

to specify the list of groups that a user is part of, so this information can also

be taken into account when for example requesting the task list or claiming a task.

For example, if a task is assigned to the group "sales" and the user "sales-rep"

that is part of that group wants to claim that task, he should pass the fact that he

is part of that group when requesting the list of tasks that he is assigned to as

potential owner:

List<String> groups = new ArrayList<String>();

groups.add("sales");

taskClient.getTasksAssignedAsPotentialOwner("sales-rep", groups, "en-UK",

taskSummaryHandler);

The WS-HumanTask specification also introduces the role of an administrator.

An administrator can manipulate the life cycle of the task, even though he might not

be assigned as a potential owner of that task. By default, jBPM registers a special

user with userId "Administrator" as the administrator of each task. You should

therefor make sure that you always define at least a user "Adminstrator" when

registering the list of valid users at the task service.

Future versions of jBPM will provide a callback interface that will simplify

the user and group management. This interface will allow you to validate users

and groups without having to register them all at the task service, and provide

a method that you can implement to dynamically resolve the groups a user is part

of (for example by contacting an existing service like LDAP). Users will then be

able to simply register their implementation of this callback interface without

having to provide the list of groupIds the user is part of for all relevent method

invocations.

12.2.5. Starting the human task service

The human task service is a completely independent service

that the process engine communicates with. We therefore recommend to

start it as a separate service as well. The installer contains a command

to start the task server (in this case using Mina as transport protocol),

or you can use the following code fragment:

EntityManagerFactory emf = Persistence.createEntityManagerFactory("org.jbpm.task");

TaskService taskService = new TaskService(emf,

SystemEventListenerFactory.getSystemEventListener());

MinaTaskServer server = new MinaTaskServer( taskService );

Thread thread = new Thread( server );

thread.start();

The task management component uses the Java Persistence API (JPA) to

store all task information in a persistent manner. To configure the

persistence, you need to modify the persistence.xml configuration file

accordingly. We refer to the JPA documentation on how to do that. The

following fragment shows for example how to use the task management component

with hibernate and an in-memory H2 database:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<persistence

version="1.0"

xsi:schemaLocation=

"http://java.sun.com/xml/ns/persistence

http://java.sun.com/xml/ns/persistence/persistence_1_0.xsd

http://java.sun.com/xml/ns/persistence/orm

http://java.sun.com/xml/ns/persistence/orm_1_0.xsd"

xmlns:orm="http://java.sun.com/xml/ns/persistence/orm"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns="http://java.sun.com/xml/ns/persistence">

<persistence-unit name="org.jbpm.task">

<provider>org.hibernate.ejb.HibernatePersistence</provider>

<class>org.jbpm.task.Attachment</class>

<class>org.jbpm.task.Content</class>

<class>org.jbpm.task.BooleanExpression</class>

<class>org.jbpm.task.Comment</class>

<class>org.jbpm.task.Deadline</class>

<class>org.jbpm.task.Comment</class>

<class>org.jbpm.task.Deadline</class>

<class>org.jbpm.task.Delegation</class>

<class>org.jbpm.task.Escalation</class>

<class>org.jbpm.task.Group</class>

<class>org.jbpm.task.I18NText</class>

<class>org.jbpm.task.Notification</class>

<class>org.jbpm.task.EmailNotification</class>

<class>org.jbpm.task.EmailNotificationHeader</class>

<class>org.jbpm.task.PeopleAssignments</class>

<class>org.jbpm.task.Reassignment</class>

<class>org.jbpm.task.Status</class>

<class>org.jbpm.task.Task</class>

<class>org.jbpm.task.TaskData</class>

<class>org.jbpm.task.SubTasksStrategy</class>

<class>org.jbpm.task.OnParentAbortAllSubTasksEndStrategy</class>

<class>org.jbpm.task.OnAllSubTasksEndParentEndStrategy</class>

<class>org.jbpm.task.User</class>

<properties>

<property name="hibernate.dialect" value="org.hibernate.dialect.H2Dialect"/>

<property name="hibernate.connection.driver_class" value="org.h2.Driver"/>

<property name="hibernate.connection.url" value="jdbc:h2:mem:mydb" />

<property name="hibernate.connection.username" value="sa"/>

<property name="hibernate.connection.password" value="sasa"/>

<property name="hibernate.connection.autocommit" value="false" />

<property name="hibernate.max_fetch_depth" value="3"/>

<property name="hibernate.hbm2ddl.auto" value="create" />

<property name="hibernate.show_sql" value="true" />

</properties>

</persistence-unit>

</persistence>

The first time you start the task management component, you need to

make sure that all the necessary users and groups are added to the database.

Our implementation requires all users and groups to be predefined before

trying to assign a task to that user or group. So you need to make sure

you add the necessary users and group to the database using the

taskSession.addUser(user) and taskSession.addGroup(group) methods. Note

that you at least need an "Administrator" user as all tasks are

automatically assigned to this user as the administrator role.

The jbpm-human-task module contains a org.jbpm.task.RunTaskService

class in the src/test/java source folder that can be used to start a task server.

It automatically adds users and groups as defined in LoadUsers.mvel and

LoadGroups.mvel configuration files.

The jBPM installer automatically starts a human task service (using an

in-memory H2 database) as a separate Java application. This task service is defined

in the task-service directory in the jbpm-installer folder. You can register

new users and task by modifying the LoadUsers.mvel and LoadGroups.mvel scripts

in the resources directory.

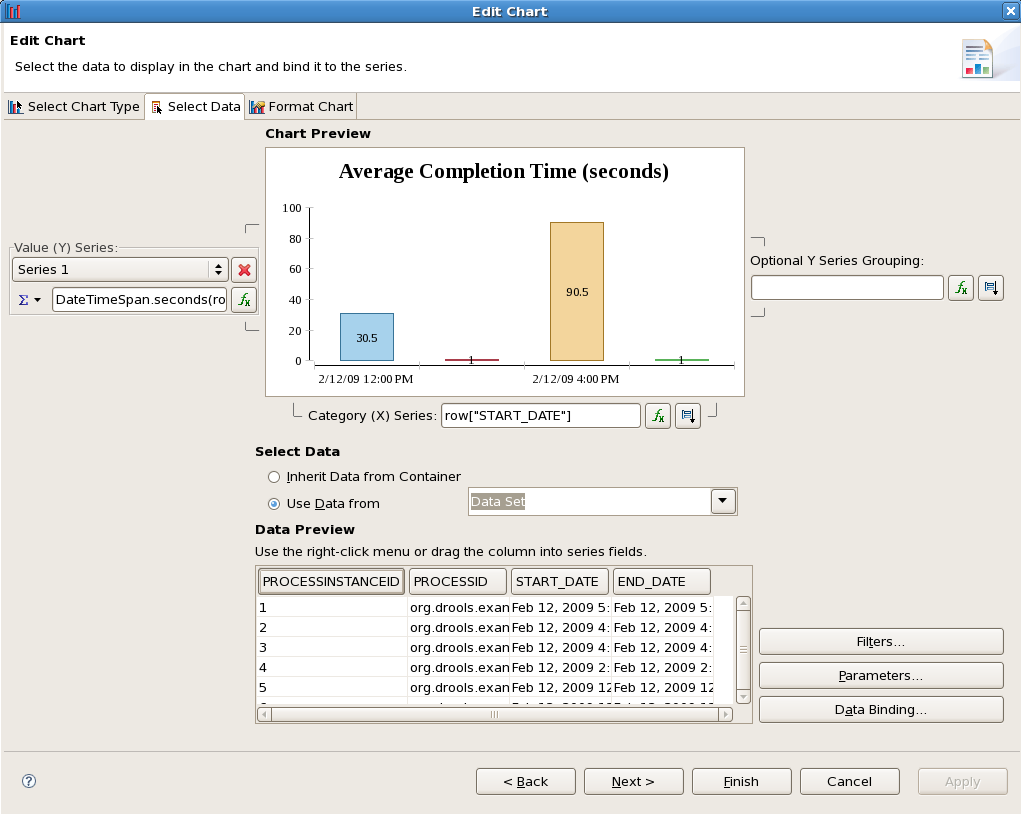

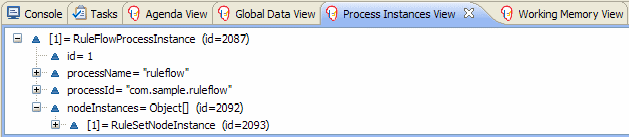

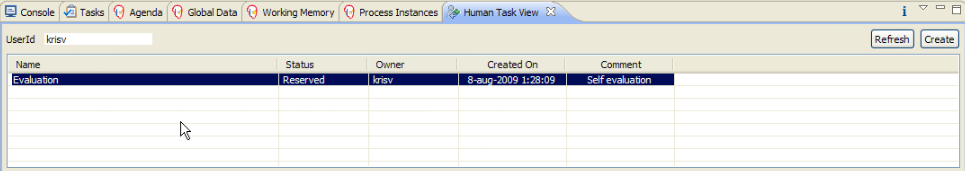

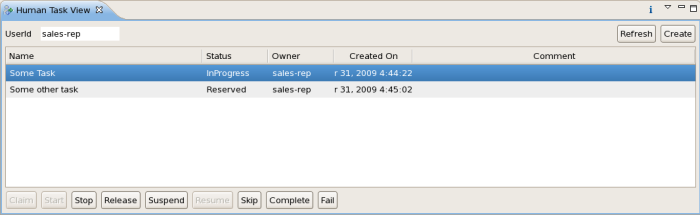

12.3.1. Eclipse demo task client

The Drools IDE contains a org.drools.eclipse.task plugin that allows you to test and/or debug

processes using human tasks. In contains a Human Task View that can connect to a running task

management component, request the relevant tasks for a particular user (i.e. the tasks where the user

is either a potential owner or the tasks that the user already claimed and is executing). The

life cycle of these tasks can then be executed, i.e. claiming or releasing a task, starting or

stopping the execution of a task, completing a task, etc. A screenshot of this Human Task View is

shown below. You can configure which task management component to connect to in the Drools Task

preference page (select Window -> Preferences and select Drools Task). Here you can specify the

url and port (default = 127.0.0.1:9123).

Notice that this task client only supports a (small) sub-set of the features

provided the human task service. But in general this is sufficient to do some

initial testing and debugging or demoing inside the Eclipse IDE.

12.3.2. Web-based task client in jBPM Console

The jBPM console also contains a task view for looking up task lists and managing

the life cycle of tasks, task forms to complete the tasks, etc. See the chapter on the

jBPM console for more information.

12.4.0 Connecting Human Task server to LDAP

jBPM comes with a dedicated UserGroupCallback implementation for LDAP servers that allows task server to retrieve user and group/role information directly from LDAP. To be able to use this callback it must be configured according to specifics of LDAP server and its structure to collect proper information.

LDAP UserGroupCallback properties

- ldap.bind.user : username used to connect to the LDAP server (optional if LDAP server accepts anonymous access)

- ldap.bind.pwd : password used to connect to the LDAP server(optional if LDAP server accepts anonymous access)

- ldap.user.ctx : context in LDAP that will be used when searching for user information (mandatory)

- ldap.role.ctx : context in LDAP that will be used when searching for group/role information (mandatory)

- ldap.user.roles.ctx : context in LDAP that will be used when searching for user group/role membership information (optional, if not given ldap.role.ctx will be used)

- ldap.user.filter : filter that will be used to search for user information, usually will contain substitution keys {0} to be replaced with parameters (mandatory)

- ldap.role.filter : filter that will be used to search for group/role information, usually will contain substitution keys {0} to be replaced with parameters (mandatory)

- ldap.user.roles.filter : filter that will be used to search for user group/role membership information, usually will contain substitution keys {0} to be replaced with parameters (mandatory)

- ldap.user.attr.id : attribute name of the user id in LDAP (optional, if not given 'uid' will be used)

- ldap.roles.attr.id : attribute name of the group/role id in LDAP (optional, if not given 'cn' will be used)

- ldap.user.id.dn : is user id a DN, instructs the callback to query for user DN before searching for roles (optional, default false)

- java.naming.factory.initial : initial conntext factory class name (default com.sun.jndi.ldap.LdapCtxFactory)

- java.naming.security.authentication : authentication type (none, simple, strong where simple is default one)

- java.naming.security.protocol : specifies security protocol to be used, for instance ssl

- java.naming.provider.url : LDAP url to be used default is ldap://localhost:389, or if protocol is set to ssl ldap://localhost:636

Depending on how human task server is started LDAP callback can be configured in two ways:

- programatically - build property object with all required attributes and register new callback

Properties properties = new Properties();

properties.setProperty(LDAPUserGroupCallbackImpl.USER_CTX, "ou=People,dc=my-domain,dc=com");

properties.setProperty(LDAPUserGroupCallbackImpl.ROLE_CTX, "ou=Roles,dc=my-domain,dc=com");

properties.setProperty(LDAPUserGroupCallbackImpl.USER_ROLES_CTX, "ou=Roles,dc=my-domain,dc=com");

properties.setProperty(LDAPUserGroupCallbackImpl.USER_FILTER, "(uid={0})");

properties.setProperty(LDAPUserGroupCallbackImpl.ROLE_FILTER, "(cn={0})");

properties.setProperty(LDAPUserGroupCallbackImpl.USER_ROLES_FILTER, "(member={0})");

UserGroupCallback ldapUserGroupCallback = new LDAPUserGroupCallbackImpl(properties);

UserGroupCallbackManager.getInstance().setCallback(ldapUserGroupCallback);

- declaratively - create property file (jbpm.usergroup.callback.properties) with all required attributes, place it on the root of the classpath and declare LDAP callback to be registered (see section Starting the human task server for deatils). Alternatively, location of jbpm.usergroup.callback.properties can be specified via system property -Djbpm.usergroup.callback.properties=FILE_LOCATION_ON_CLASSPATH

#ldap.bind.user=

#ldap.bind.pwd=

ldap.user.ctx=ou\=People,dc\=my-domain,dc\=com

ldap.role.ctx=ou\=Roles,dc\=my-domain,dc\=com

ldap.user.roles.ctx=ou\=Roles,dc\=my-domain,dc\=com

ldap.user.filter=(uid\={0})

ldap.role.filter=(cn\={0})

ldap.user.roles.filter=(member\={0})

#ldap.user.attr.id=

#ldap.roles.attr.id=

12.4.1. Configure escalation and notifications

To allow Task Server to perform escalations and notification a bit of configuration is required. Most of the configuration is for notification support as it relies on external system (mail server) but as they are handled by EscalatedDeadlineHandler implementation so configuration apply to both.

// configure email service

Properties emailProperties = new Properties();

emailProperties.setProperty("from", "jbpm@domain.com");

emailProperties.setProperty("replyTo", "jbpm@domain.com");

emailProperties.setProperty("mail.smtp.host", "localhost");

emailProperties.setProperty("mail.smtp.port", "2345");

// configure default UserInfo

Properties userInfoProperties = new Properties();

// : separated values for each org entity email:locale:display-name

userInfoProperties.setProperty("john", "john@domain.com:en-UK:John");

userInfoProperties.setProperty("mike", "mike@domain.com:en-UK:Mike");

userInfoProperties.setProperty("Administrator", "admin@domain.com:en-UK:Admin");

// build escalation handler

DefaultEscalatedDeadlineHandler handler = new DefaultEscalatedDeadlineHandler(emailProperties);

// set user info on the escalation handler

handler.setUserInfo(new DefaultUserInfo(userInfoProperties));

EntityManagerFactory emf = Persistence.createEntityManagerFactory("org.jbpm.task");

// when building TaskService provide escalation handler as argument

TaskService taskService = new TaskService(emf, SystemEventListenerFactory.getSystemEventListener(), handler);

MinaTaskServer server = new MinaTaskServer( taskService );

Thread thread = new Thread( server );

thread.start();

Note that default implementation of UserInfo is just for demo purposes to have a fully operational task server. Custom user info classes can be provided that implement following interface:

public interface UserInfo {

String getDisplayName(OrganizationalEntity entity);

Iterator<OrganizationalEntity> getMembersForGroup(Group group);

boolean hasEmail(Group group);

String getEmailForEntity(OrganizationalEntity entity);

String getLanguageForEntity(OrganizationalEntity entity);

}

If you are using the jBPM installer, just drop your property files into

$jbpm-installer-dir$/task-service/resources/org/jbpm/, make sure that they are named email.properties and userinfo.properties.

For more information follow my Tutorial online @ http://jbpmmaster.blogspot.com/